
Inside Facebook's Blu-ray storage rack.

Facebook

We wrote on Wednesday about how Facebook has developed a prototype storage system that uses 10,000 Blu-ray discs to hold a petabyte of data. After that story posted we were able to talk to Frank Frankovsky, VP of hardware design and supply chain operations at Facebook, to find out just why he's so excited about the project. While the Blu-ray storage system is just a prototype, Facebook hopes to get it in production sometime this year and share the design with the Open Compute Project community to spur adoption elsewhere. If Facebook and others start using Blu-ray discs for long-term archival storage, Blu-ray manufacturers will see a new market opportunity and pursue it, Frankovsky said.

Friday, January 31, 2014

Tuesday, January 28, 2014

A Beginner's Guide to Encryption: What It Is and How to Set it Up

You've probably heard the word "encryption" a million times before, but if you still aren't exactly sure what it is, we've got you covered. Here's a basic introduction to encryption, when you should use it, and how to set it up.

What Is Encryption?

Encryption is a method of protecting data from people you don't want to see it. For example, when you use your credit card on Amazon, your computer encrypts that information so that others can't steal your personal data as it's being transferred. Similarly, if you have a file on your computer that you want to keep to yourself, you can encrypt it so that no one can open that file without the password. It's great for everything from sending sensitive information to securing your email, keeping your cloud storage safe, and even hiding your entire operating system.

http://lifehacker.com/a-beginners-guide-to-encryption-what-it-is-and-how-to-1508196946

Monday, January 27, 2014

New NSA documents reveal massive data collection from mobile apps

The Verge by Russell Brandom

The documents also specifically instruct agency staffers in "intercepting Google Maps queries made on smartphones, and using them to collect large volumes of location information." A 2010 document also highlights Android phones as sending GPS information "in the clear" (without encryption), giving the NSA the user's location every time he or she pulls up Google Maps.

More advanced capabilities were also on display from the agency's targeted malware program. One slide lists targeted plugins to enable "hot mic" recording, high precision geo-tracking, and file retrieval which would reach any content stored locally on the phone. That includes text messages, emails and calendar entries. As the slide notes in a parenthetical aside, "if its [sic] on the phone, we can get it."

Scientists detect “spoiled onions” trying to sabotage Tor privacy network

Rogue Tor volunteers perform attacks that try to degrade encrypted connections.

by Dan Goodin - Jan 21 2014, 5:42pm EST

The structure of a three-hop Tor circuit.

Computer scientists have identified almost two dozen computers that were actively working to sabotage the Tor privacy network by carrying out attacks that can degrade encrypted connections between end users and the websites or servers they visit.

The "spoiled onions," as the researchers from Karlstad University in Sweden dubbed the bad actors, were among the 1,000 or so volunteer computers that typically made up the final nodes that exited the Tor—short for The Onion Router—network at any given time in recent months. Because these exit relays act as a bridge between the encrypted Tor network and the open Internet, the egressing traffic is decrypted as it leaves. That means operators of these servers can see traffic as it was sent by the end user. Any data the end user sent unencrypted, as well as the destinations of servers receiving or responding to data passed between an end user and server, can be monitored—and potentially modified—by malicious volunteers. Privacy advocates have long acknowledged the possibility that the National Security Agency and spy agencies across the world operate such rogue exit nodes.

The paper—titled Spoiled Onions: Exposing Malicious Tor Exit Relays—is among the first to document the existence of exit nodes deliberately working to tamper with end users' traffic (a paper with similar findings is here). Still, it remains doubtful that any of the 25 misconfigured or outright malicious servers were operated by NSA agents. Two of the 25 servers appeared to redirect traffic when end users attempted to visit pornography sites, leading the researchers to suspect they were carrying out censorship regimes required by the countries in which they operated. A third server suffered from what researchers said was a configuration error in the OpenDNS server.

The remainder carried out so-called man-in-the-middle (MitM) attacks designed to degrade encrypted Web or SSH traffic to plaintext traffic. The servers did this by using the well-known sslstrip attack designed by researcher Moxie Marlinspike or another common MitM technique that converts unreadable HTTPS traffic into plaintext HTTP. Often, the attacks involved replacing the valid encryption key certificate with a forged certificate self-signed by the attacker.

"All the remaining relays engaged in HTTPS and/or SSH MitM attacks," researchers Philipp Winter and Stefan Lindskog wrote. "Upon establishing a connection to the decoy destination, these relays exchanged the destination's certificate with their own, self-signed version. Since these certificates were not issued by a trusted authority contained in TorBrowser's certificate store, a user falling prey to such a MitM attack would be redirected to the about:certerror warning page."

From Russia with love

The 22 malicious servers were among about 1,000 exit nodes that were typically available on Tor at any given time over a four-month period. (The precise number of exit relays regularly changes as some go offline and others come online.) The researchers found evidence that 19 of the 22 malicious servers were operated by the same person or group of people. Each of the 19 servers presented forged certificates containing the same identifying information. The virtually identical certificate information meant the MitM attacks shared a common origin. What's more, all the servers used the highly outdated version 0.2.2.37 of Tor, and all but one of the servers were hosted in the network of a virtual private system provider located in Russia. Several of the IP addresses were also located in the same net block.

The researchers caution that there's no way to know that the operators of the malicious exit nodes are the ones carrying out the attacks. It's possible the actual attacks may be carried out by the ISPs or network backbone providers that serve the malicious nodes. Still, the researchers discounted the likelihood of an upstream provider of the Russian exit relays carrying out the attacks for several reasons. For one, the relays relied on a diverse set of IP address blocks, including one based in the US. The relays frequently disappeared after they were flagged as untrustworthy, researchers also noted.

The researchers identified the rogue volunteers by scanning for server relays that replaced valid HTTPS certificates with forged ones. That means some certificate forgery attacks, such as the one used in 2011 to monitor 300,000 Gmail users, wouldn't be detected using the methods devised by the researchers. The researchers don't believe the malicious nodes they observed were operated by the NSA or other government agencies.

"Organizations like the NSA have read/write access to large parts of the Internet backbone," Karlstad University's Winter wrote in an e-mail. "They simply do not need to run Tor relays. We believe that the attacks we discovered are mostly done by independent individuals who want to experiment."

While the confirmation of malicious exit nodes is novel and important, it's not particularly surprising. Tor officials have long warned that Tor does nothing to encrypt plaintext communications before they enter or once they leave the network. That means ISPs, remote sites, VPN providers, and the Tor exit relay itself can all see the communications that aren't encrypted by end users and the parties they communicate with. Tor officials have long counseled users to rely on HTTPS, e-mail encryption, or other methods to ensure that traffic receives end-to-end encryption.

The researchers have proposed a series of updates to the "Torbutton" software used by most Tor users. Among other things, the proof-of-concept software fix would use an alternative exit relay to refetch all self-signed certificates delivered over Tor. The software would then compare the digital fingerprints of the two certificates. It's feasible that the changes might one day include certificate pinning, a technique for ensuring that a certificate presented by Google, Twitter, and other sites is the one authorized by the operator rather than a counterfeit one. Several hours after this article went live, Winter published this blog post titled What the "Spoiled Onions" paper means for Tor users.
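The fingerprint comparison described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the researchers' Torbutton patch: the function names are hypothetical, the certificate bytes are placeholders, and SHA-256 is assumed as the fingerprint hash. Fetching the certificate over two different Tor circuits is left out entirely.

```python
import hashlib
import hmac


def fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(cert_der).hexdigest()


def same_certificate(cert_via_first_exit: bytes, cert_via_second_exit: bytes) -> bool:
    """True when both exit relays delivered byte-identical certificates."""
    return hmac.compare_digest(
        fingerprint(cert_via_first_exit),
        fingerprint(cert_via_second_exit),
    )


# Placeholder bytes standing in for real DER certificates (hypothetical data).
genuine = b"example self-signed certificate from the real site"
forged = b"example self-signed certificate swapped in by a rogue exit"

print(same_certificate(genuine, genuine))  # both circuits agree
print(same_certificate(genuine, forged))   # mismatch: possible MitM on one circuit
```

A mismatch does not prove which of the two relays is misbehaving, only that the two circuits saw different certificates and the connection deserves suspicion.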

Wednesday, January 22, 2014

Feds: Thieves with Bluetooth-enabled data skimmers stole over $2 million

13 men were charged with identity theft in a Manhattan District Court.

by Megan Geuss - Jan 21 2014, 10:55pm EST

On Tuesday, 13 men were charged in a case involving Bluetooth-enabled data skimmers planted on gas station pumps. The hackers allegedly made more than $2 million by downloading ATM information from the gas pumps, and then using that data to withdraw cash from ATMs in Manhattan.

According to Manhattan District Attorney Cyrus R. Vance, the suspects used credit card skimming devices that were Bluetooth-enabled and internally installed on pumps at Raceway and Racetrac gas stations throughout Texas, Georgia, and South Carolina. Because of this configuration, the skimmers were invisible to people who paid at the pumps, and later, the suspects were able to download the skimmed data without physically removing the devices.

Between March 2012 and March 2013, the suspects used forged credit cards to withdraw money at ATMs around Manhattan and deposited the cash in New York bank accounts, feds say. Then, other members of the group withdrew the money from those bank accounts in California or Nevada.

"Each of the defendants’ transactions was under $10,000,” wrote the Manhattan District Attorney's office. “They were allegedly structured in a manner to avoid any cash transaction reporting requirements imposed by law and to disguise the nature, ownership, and control of the defendants’ criminal proceeds. From March 26, 2012, to March 28, 2013, the defendants are accused of laundering approximately $2.1 million.”

Four of the 13 men (Garegin Spartalyan, 40, Aram Martirosian, 34, Hayk Dzhandzhapanyan, 40, and Davit Kudugulyan, 42) are considered lead defendants and are charged with money laundering, theft, and possession of a forgery device and forgery instruments. The other men are each charged with two counts of money laundering.

Hackers Swiped 70,000 Records from Healthcare.gov in Four Minutes (Updated)

David Kennedy, a white hat hacker and TrustedSec CEO, has been warning anyone who would listen since November that the flawed government website was highly insecure. Now, after using passive reconnaissance, "which allowed [him] to query and look at how the website operates and performs," Kennedy revealed that he was able to access 70,000 records in under four minutes, granting him access to information such as names, social security numbers, email addresses, and home addresses, just to name a few. What's more, he didn't even technically have to hack into the website at all.

After the bevy of problems Healthcare.gov encountered in its first few months of life, dumping one more onto the pile shouldn't faze you all that much, right? Well, not if that hiccup is actually a gaping vulnerability, and one that can grant hackers access to over 70,000 private records in just four minutes, at that.

In talking to Fox News Sunday, Kennedy explained what he believed to be the source of the problem:

The problem is if you look at the integration between the IRS, DHS, third party credit verification processes, you have all of these different organizations that feed into this data hub for the healthcare.gov infrastructure to provide all that information and validate everything. And so if an attacker gets access to that, they basically have full access into your entire online identity, everything that you do from taxes to, you know, what you pay, what you make, what DHS has on you from a tracking perspective as well as obviously, you know, what we call personal identifiable information which is what an attacker would use to take a line of credit out from your account. It's really damaging.

Still, Teresa Fryer, the chief information security officer for the Centers for Medicare and Medicaid Services, testified before the House Oversight Committee, claiming that cybersecurity testing had been successfully completed and that "there have been no successful attacks on the site."

Of course, claiming that there's been no attacks on the site doesn't inspire much confidence when the information is accessible without ever entering the site in the first place.

Update 5:00PM EST: David Kennedy has taken to the TrustedSec website to clarify that it was not, in fact, 70,000 records that were swiped. Rather, that number was simply "tested for as an example through utilizing Google's advanced search." Kennedy's full update follows:

There's been a few stories running around in the media around accessing 70,000 records on the healthcare.gov website. Just to note on this, we never accessed 70,000 records nor is it directly on the healthcare.gov website (a sub-site for the infrastructure). The number 70,000 was a number that was tested for as an example through utilizing Google's advanced search functionality as well as normally browsing the website. No dumping of data, malicious intent, hacking, or even viewing of the information was done. We do not support the statements from the news organizations. From a previous blog post, the information shown in the python script was sanitized and not used through Google scraping (urllib2 python module). We've reached out to the news agencies to clarify as these were not our words.

Thursday, January 16, 2014

Point-of-sale malware infecting Target found hiding in plain sight

KrebsOnSecurity's Brian Krebs uncovers "memory-scraping" malware on public site.

by Dan Goodin - Jan 15 2014, 8:22pm EST

Independent security journalist Brian Krebs has uncovered important new details about the hack that compromised as many as 110 million Target customers, including the malware that appears to have infected point-of-sale systems and the way attackers first broke in.

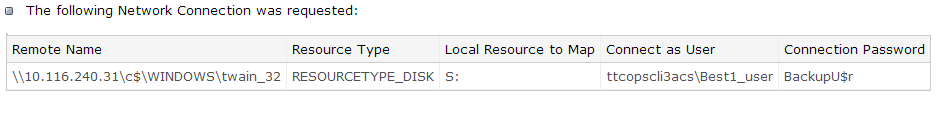

According to a post published Wednesday to KrebsOnSecurity, point-of-sale (POS) malware was uploaded to Symantec-owned ThreatExpert.com on December 18, the same day that Krebs broke the news of the massive Target breach. An unidentified source told Krebs that the Windows share point name "ttcopscli3acs" found in the sample analyzed by the malware scanning website matches the one used in the Target intrusion. The thieves used the user name "Best1_user" to log in and download stolen card data. Their password was "BackupU$r".

KrebsonSecurity

The class of malware identified by Krebs is often referred to as a memory scraper, because it monitors the computer memory of POS terminals used by retailers. The malware searches for credit card data before it has been encrypted and sent to remote payment processors. The malware then "scrapes" the plain-text entries and dumps them into a database. Krebs continued:

According to a source close to the investigation, that threatexpert.com report is related to the malware analyzed at this Symantec writeup (also published Dec. 18) for a point-of-sale malware strain that Symantec calls "Reedum" (note the Windows service name of the malicious process is the same as the ThreatExpert analysis "POSWDS"). Interestingly, a search in Virustotal.com, a Google-owned malware scanning service, for the term “reedum” suggests that this malware has been used in previous intrusions dating back to at least June 2013; in the screen shot below left, we can see a notation added to that virustotal submission, “30503 POS malware from FBI."

The source close to the Target investigation said that at the time this POS malware was installed in Target's environment (sometime prior to Nov. 27, 2013), none of the 40-plus commercial antivirus tools used to scan malware at virustotal.com flagged the POS malware (or any related hacking tools that were used in the intrusion) as malicious. “They were customized to avoid detection and for use in specific environments,” the source said.

That source and one other involved in the investigation who also asked not to be named said the POS malware appears to be nearly identical to a piece of code sold on cybercrime forums called BlackPOS, a relatively crude but effective crimeware product. BlackPOS is a specialized piece of malware designed to be installed on POS devices and record all data from credit and debit cards swiped through the infected system.

According to the author of BlackPOS, an individual who uses a variety of nicknames, including “Antikiller,” the POS malware is roughly 207 kilobytes in size and is designed to bypass firewall software. The barebones “budget version” of the crimeware costs $1,800, while a more feature-rich “full version” (including options for encrypting stolen data, for example) runs $2,300.

Krebs went on to report that sources told him the attackers broke into Target after hacking a company Web server. From there, the attackers somehow managed to upload the POS malware to the checkout machines located at various stores. The sources said the attackers appeared to then establish a control server inside Target's internal network that "served as a central repository for data hoovered by all of the infected point-of-sale devices." The attackers appear to have had persistent access to the internal server, an ability that allowed them to periodically log in and collect the pilfered data.

The details haven't been independently verified by Ars. That said, Wednesday's report is consistent with what's already known about the compromise. For instance, last week's news that the attackers also made off with names, mailing addresses, phone numbers, and e-mail addresses of Target customers already suggested the hackers had intruded deep inside Target's network and that the point-of-sale malware was just one of the tools used to extract sensitive data. Word that the intruders gained initial access through Target's website is also consistent with what's known about previous hacks on large holders of payment card data. Court documents filed in 2009 against now-convicted hacker Albert Gonzalez said one of the ways his gang compromised Heartland Payment Systems, as well as retailers 7-Eleven and Hannaford Brothers, was by casing their websites and exploiting SQL-injection vulnerabilities.

Wednesday, January 15, 2014

The wrong words: how the FCC lost net neutrality and could kill the internet

The Verge by Nilay Patel

That was the overwhelming message delivered to the FCC by the DC Circuit yesterday when it ruled to vacate the agency’s net neutrality rules. The FCC had tried to impose so-called “common carrier” regulations on broadband providers without officially classifying them as utilities subject to those types of rules, and the court rejected that sleight of hand. Most observers saw the decision coming months, if not years, ago; Cardozo Law School’s Susan Crawford called the FCC’s position a “house of cards.” I’ll be a little more clear: it’s bullshit. Bullshit built on cowardice and political expediency instead of sound policymaking. Bullshit built on the wrong words.

Since 1980, the FCC has divided communication services into basic and enhanced categories; phone lines, with their "pure" transmission, are basic, while services like web hosting, which process information, are enhanced. Only basic services are subject to what are known as common carrier laws, which stop carriers from discriminating against or refusing service to customers. Over time, those categories matured and gained new names: the basic services were tightly regulated under Title II of the Telecommunications Act as "telecommunications services," while enhanced services were regulated under the much weaker Title I as "information services."

What is net neutrality?

At its simplest, net neutrality holds that just as phone companies can’t check who’s on the line and selectively block or degrade the service of callers, everyone on the internet should start on roughly the same footing: ISPs shouldn’t slow down services, block legal content, or let companies pay for their data to get to customers faster than a competitor’s.

In this case, we’re also talking about a very specific policy: the Open Internet Order, which the FCC adopted in 2010. Under the order, wired and wireless broadband providers must disclose how they manage network traffic. Wired providers can’t block lawful content, software, services, or devices, and wireless providers can’t block websites or directly competing apps. And wired providers can’t "unreasonably discriminate" in transmitting information. The FCC has been trying in one way or another to implement net neutrality rules since 2005, but this latest defeat is the second time its principles have been put to the test and failed.

Timeline

1980

The FCC adopts its "Computer II" policies, establishing separate rules for "basic" and "enhanced" communications services. Basic services are subject to "common carrier" rules, which stop them from blocking or discriminating against traffic over their networks.

1996

The new Telecommunications Act creates more specific terms. Basic services are now called Title II "telecommunications carriers," which simply transmit information, and enhanced services that offer interactive features are classified as Title I "information service providers." DSL companies are classified as carriers, while AOL-style internet portals fall under information services.

2002

After legal confusion, cable broadband is defined as an information service, effectively exempting the most popular consumer internet providers from common carrier rules.

2005

A court decision upholds the FCC's definitions, but DSL and wireless are reclassified as information services. The FCC establishes its first set of "open internet" rules, four principles that grant users the right to access any lawful content and use any devices and services they want on a network.

2007

Comcast is found to be slowing down BitTorrent traffic, hurting customers’ ability to use the service.

2008

The FCC requires Comcast to change its policy, but Comcast files suit to overturn the order, arguing that the FCC has no authority to censure it.

April 2010

FCC chair Julius Genachowski proposes two new open internet principles: non-discrimination, which would stop carriers from slowing particular services, and transparency, which would require them to make their network management practices public. His idea is to take specific rules that govern Title II telecommunications carriers and apply them to Title I information service providers.

December 2010

After its defeat in court, the FCC revises its standards and releases Genachowski’s Open Internet Order, justifying it as a necessary move to promote broadband adoption. Broadband companies are still classified as information service providers.

November 2011

The Open Internet rules go into effect, barring wired broadband providers from blocking, slowing, or prioritizing traffic in most cases. In a compromise, wireless carriers were exempted from these rules.

July 2012

Verizon and MetroPCS appeal the order.

January 14th, 2014

The DC court once again rules against the FCC, striking down its anti-blocking and anti-discrimination requirements in an almost complete victory for Verizon. The court says that the FCC has proven that broadband providers represent a threat to internet openness, but that the government can’t impose common carrier rules on information service providers.

The FCC’s first attempt to regulate broadband providers consisted of four "open internet" principles adopted in 2005. They were meant to "encourage broadband deployment and preserve and promote the open and interconnected nature of the public internet" by stopping companies like AT&T and Comcast from blocking devices or services.

In 2007 the house of cards started tumbling down. Comcast customers found that the company was drastically slowing BitTorrent speeds and the FCC took action, slapping it with an order to stop the throttling and tell subscribers exactly how it managed their traffic. Comcast agreed to the plan, but it took the whole issue to court, making a point that would come up again and again: the FCC’s justification for the open internet principles was vague at best.

The issue was so contentious and so important to the titans of the internet and media industries that net neutrality became an issue in the 2008 presidential election, with Obama issuing his support for an open internet. Even with Obama’s support, Comcast beat the FCC in 2010 when Judge David Tatel, the same judge who wrote yesterday’s net neutrality decision, found that the agency lacked the authority to enforce the open internet principles because Comcast was an information service provider, not a telecommunications provider. The FCC had used the wrong words.

The timing could hardly have been worse. Newly appointed FCC chairman Julius Genachowski had just started work on a revamped version of the open internet rules, adding two principles that directly addressed Comcast's actions: companies couldn't discriminate against traffic by slowing it beyond what was necessary to keep a network running, and they had to be transparent about any reasonable management.

When the Comcast decision came down in early 2010, the FCC scrambled to build a stronger framework, but Genachowski insisted on building consensus with the industry and refused to reclassify broadband as a telecommunications service, and further compromised by exempting mobile providers from the regulations. He arrived at the Open Internet Order, which enshrined transparency and non-discrimination but was still built on the wrong words. Critics like Susan Crawford referred to the plan as "once more, with feeling." Comcast was happy, but Verizon wasn’t; it took the FCC to court once again.

And as we saw yesterday, Genachowski’s Open Internet Order didn't stand up any better. No matter how the FCC defends its rules, net neutrality regulations for information services look a whole lot like common carrier rules for telecommunications providers, and all Verizon had to do was point that out.

That’s it. That’s the whole mistake. The wrong words. The entire American internet experience is now at risk of turning into a walled garden of corporate control because the FCC chickened out and picked the wrong words in 2002, and the court called them on it twice over. You used the wrong words.

The court even agreed with the FCC’s policy goals: after a bitterly fought lawsuit and thousands of pages of high-priced arguments from Verizon and its supporters, Judge Tatel was convinced that "broadband providers represent a threat to internet openness and could act in ways that would ultimately inhibit the speed and extent of future broadband deployment." Too bad you used the wrong fucking words.

What happens now is entirely dependent on whether the FCC’s new chairman, Tom Wheeler, has the courage to stand up and finally say the right words: that broadband access is a telecommunications service that should be regulated just like landline phones. He need only convince two additional FCC commissioners to agree with him, and the argument is simple: consumers already perceive internet service as a utility, and it’s advertised only on the commodity basis of speed and price. But the political cost will be incredible.

National Cable and Telecommunications Association CEO Michael Powell, the former FCC chairman who issued the 2005 open internet rules, has said that any attempts to reclassify broadband as a common carrier telecommunications service will be "World War III." That’s not an idle threat: the NCTA is a powerful force in the industry, and it counts major companies like Comcast and Verizon as members. That’s a lot of influence to throw around: not only does Comcast lobby and donate freely, but it also owns NBCUniversal, giving it the kind of power over the American political conversation few corporations have even dared to dream about. Put enough pressure on Congress, and they’ll start making noise about the FCC’s budget, a budget Wheeler needs to hold his upcoming spectrum auctions, which have until now been the cornerstone of his regulatory agenda.

So, this is going to be chaos. All you’re going to hear from now on is that net neutrality proponents want to "regulate the internet," a conflation so insidious it boggles the mind.

Comcast and Time Warner Cable and Verizon are not the internet. We are the internet: the people. It is us who make things like Reddit and Facebook and Twitter vibrant communities of unfiltered conversation. It is us who wield the unaffected market power that picks Google over Bing and Amazon over everything. It’s us who turned Netflix from a DVD-by-mail company into a video giant that uses a third of the US internet’s bandwidth each night. And it is us who can quit stable but boring corporate jobs to start new businesses like The Verge and Vox Media without anyone’s permission. Comcast and Verizon are just pipes. The dumber the better.

It’s time to start using the right words.

Sidebar and additional reporting by Adi Robertson.

NSA uses covert radio transmissions to monitor thousands of bugged computers

Ars Technica by Megan Geuss

The National Security Operations Center at NSA, photographed in 2012—the nerve center of the NSA's "signals intelligence" monitoring.

Based on information found in NSA documents and gathered from “computer experts and American officials,” the New York Times confirmed information that came to light toward the end of December regarding several of the technologies that were available to the NSA as of 2008. The NSA's arsenal, the Times wrote, includes technology that “relies on a covert channel of radio waves that can be transmitted from tiny circuit boards and USB cards inserted surreptitiously into the computers. In some cases, they are sent to a briefcase-size relay station that intelligence agencies can set up miles away from the target.”

The German publication Der Spiegel published an interactive graphic two weeks ago detailing many of the ways that the NSA uses hardware to spy on its targets, including a “range of USB plug bugging devices,” which can be concealed in a common keyboard USB plug, for example. As with any hardware, though, many of these spying tools “must be physically inserted by a spy, a manufacturer, or an unwitting user.” Der Spiegel writes that the NSA refers to this physical implant as “interdiction,” and it “involves installing hardware units on a targeted computer by, for example, intercepting the device when it’s first being delivered to its intended recipient.”

Sound too theoretical to make you care about filesystems? Let's talk about "bitrot," the silent corruption of data on disk or tape. One at a time, year by year, a random bit here or there gets flipped. If you have a malfunctioning drive or controller (or a loose/faulty cable) a lot of bits might get flipped. Bitrot is a real thing, and it affects you more than you probably realize. The JPEG that ended in blocky weirdness halfway down? Bitrot. The MP3 that startled you with a violent CHIRP!, and you wondered if it had always done that? No, it probably hadn't; blame bitrot. The video with a bright green block in one corner followed by several seconds of weird rainbowy blocky stuff before it cleared up again? Bitrot. The worst thing is that backups won't save you from bitrot. The next backup will cheerfully back up the corrupted data, replacing your last good backup with the bad one. Before long, you'll have rotated through all of your backups (if you even have multiple backups), and the uncorrupted original is now gone for good.
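To make the "silent" part concrete, here is a minimal Python sketch of how a single flipped bit can be caught by comparing a stored checksum against a fresh one. The excerpt above doesn't prescribe any particular detection method, so treat the SHA-256 checksum, the helper names, and the fake file contents as assumptions chosen purely for illustration.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted (simulated bitrot)."""
    corrupted = bytearray(data)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


# Hypothetical file contents; a real check would read the bytes from disk.
original = b"pretend this is a JPEG, an MP3, or a video file" * 100
recorded = checksum(original)  # saved while the file is known to be good

rotted = flip_bit(original, 12345)  # one bit out of 37,600 changes

print(checksum(original) == recorded)  # True: file still matches its record
print(checksum(rotted) == recorded)    # False: the single flipped bit is detected
```

A plain backup copies the rotted bytes without complaint because nothing compares them against a known-good record. Note that a checksum only detects the damage; repairing it still requires an intact copy somewhere.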

Thursday, January 9, 2014

Security Essentials for Windows XP will die when the OS does

The antivirus software will stop getting updates, and you won't be able to install it.

by Peter Bright - Jan 8 2014, 4:38pm EST - From Ars Technica

There are three months to go for Windows XP. The ancient operating system is leaving extended support on April 8, at which point Microsoft will no longer ship free security fixes. XP itself isn't the only thing that's losing support on that date. The Windows XP version of Microsoft Security Essentials, the company's anti-malware app, will stop receiving signature updates at the same time and will no longer be available for download.

The message is clear: after April 8, Windows XP will be insecure, and Redmond isn't going to provide even a partial remedy for the security issues that will arise. Antivirus software is just papering over the cracks if the operating system itself isn't getting fixed.

In contrast, both Google and Mozilla will provide updates for Chrome and Firefox beyond the cessation of Microsoft's support. Google has committed to supporting Chrome until April 2015.

With three months to go and Windows XP still holding almost a thirty-percent usage share of the Web, the ending of support is going to have an impact on a lot of people. Still, it's unlikely that killing off MSE is going to be the straw that breaks the camel's back and forces these Windows XP holdouts to upgrade.

The big question is, what will? XP's end of life shouldn't come as a surprise to anyone, but there are plenty of XP users who evidently don't care. There's no chance now that the remaining users will migrate off the operating system in the few remaining months of support. An abundance of insecure, exploitable, and most likely exploited Windows XP machines is now an inevitability.

Peter Bright / Peter is a Microsoft Contributor at Ars. He also covers programming and software development, Web technology and browsers, and security. He is based in Houston, TX.

Thursday, January 2, 2014

SD Card Hack Shows Flash Storage Is Programmable: Unreliable Memory

Technabob by Lambert Varias

Ever wonder why SD cards are dirt cheap? At the 2013 Chaos Communication Congress, a hacker going by the moniker Bunnie revealed part of the reason: “In reality, all flash memory is riddled with defects — without exception.” But that tidbit is nothing compared to the point of his presentation, in which he and fellow hacker Xobs revealed that SD cards and other flash storage formats contain programmable computers.

Apparently flash storage manufacturers use firmware to manage how data is stored as well as to obscure the chip’s shortcomings. For instance, Bunnie claims that some 16GB chips are so damaged upon manufacture that only 2GB worth of data can be stored on them. But instead of being trashed, they’re sold as 2GB cards. In order to obscure things like that – as well as to handle increasingly complex data abstraction – SD cards are loaded with firmware.

Long story short, Bunnie and Xobs found out that the microcontrollers in SD cards can be used to deploy a variety of programs – both good and bad – or at least tweak the card’s original firmware. For instance, while researching in China, Bunnie found SD cards in some electronics shops that had their firmware modified. The vendors “load a firmware that reports the capacity of a card is much larger than the actual available storage.” The fact that those cards were modified supports Bunnie and Xobs’ claim: that other people besides manufacturers can manipulate the firmware in SD cards. Turns out the memories of our computers are as unreliable as ours. [via Bunnie via BGR]
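A card whose firmware overstates its capacity can, in principle, be caught by filling it with data that is unique per block and then reading everything back. The Python sketch below illustrates that check against a simulated card only. `WrappingCard`, `pattern`, and `count_good_blocks` are hypothetical names, and the assumption that a fake card silently wraps writes back onto its real storage is just one possible behavior, not something the article describes.

```python
import hashlib

BLOCK_SIZE = 512


def pattern(index, size=BLOCK_SIZE):
    """Return deterministic data that is unique to one block index."""
    seed = hashlib.sha256(index.to_bytes(8, "big")).digest()
    return (seed * (size // len(seed) + 1))[:size]


class WrappingCard:
    """Simulated card (hypothetical): reports advertised_blocks, but
    only real_blocks exist, and writes past the end wrap around."""

    def __init__(self, advertised_blocks, real_blocks):
        self.advertised_blocks = advertised_blocks
        self._real_blocks = real_blocks
        self._store = {}

    def write_block(self, index, data):
        self._store[index % self._real_blocks] = data

    def read_block(self, index):
        return self._store.get(index % self._real_blocks, bytes(BLOCK_SIZE))


def count_good_blocks(card):
    """Write every advertised block, then count the blocks that read
    back intact. All writes happen before any read, so later writes
    get the chance to clobber earlier ones."""
    for i in range(card.advertised_blocks):
        card.write_block(i, pattern(i))
    return sum(
        1 for i in range(card.advertised_blocks)
        if card.read_block(i) == pattern(i)
    )


honest = WrappingCard(advertised_blocks=16, real_blocks=16)
fake = WrappingCard(advertised_blocks=16, real_blocks=4)
print(count_good_blocks(honest), count_good_blocks(fake))
```

The per-block pattern matters: if every block held the same bytes, a wrapped write would be indistinguishable from a correct one. Under the wrap-around assumption, the number of blocks that survive equals the storage that really exists.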
